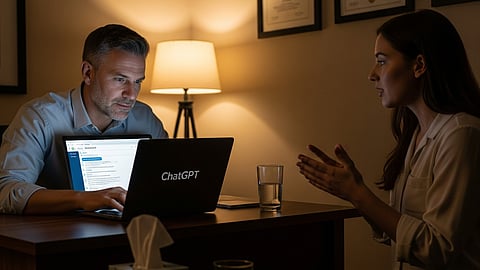

AI chatbots like ChatGPT present serious privacy risks in healthcare contexts. As outlined in the Journal of Law, Medicine & Ethics (Cambridge University Press), when a covered entity (e.g., a hospital or healthcare provider) uses an AI vendor to process protected health information (PHI) on its behalf, that vendor becomes a HIPAA business associate and must comply with HIPAA's safeguards.(2)

However, many scenarios fall outside HIPAA's clear jurisdiction. For example, when patients voluntarily enter their own PHI into AI tools, the developers or vendors of those tools may not qualify as business associates, so HIPAA protections may not apply. In that gap, AI vendors may operate without any HIPAA obligation to safeguard the sensitive information they receive.

Moreover, the FDA has not issued specific guidelines or regulations for large language models (LLMs) such as ChatGPT or Bard (2) when they are used in therapy contexts. This regulatory vacuum further blurs clinical and legal responsibility for AI-assisted mental health services.

References:

Rezaeikhonakdar, D. “AI Chatbots and Challenges of HIPAA Compliance for AI Developers and Vendors.” Journal of Law, Medicine & Ethics, 2023–2024. Cambridge University Press. https://www.cambridge.org/core/journals/journal-of-law-medicine-and-ethics/article/ai-chatbots-and-challenges-of-hipaa-compliance-for-ai-developers-and-vendors/C873B37AF3901C034FECAEE4598D4A6A.

Metz, Cade. “Therapists Using ChatGPT Secretly.” MIT Technology Review, September 2, 2025. https://www.technologyreview.com/2025/09/02/1122871/therapists-using-chatgpt-secretly/.

(Rh/Eth/VK/MSM)