A tragic case in Old Greenwich, Connecticut, brings urgent attention to how conversational AI may exacerbate mental health crises. On August 5, 56-year-old Stein-Erik Soelberg, a former marketing executive at Yahoo, killed his 83-year-old mother, Suzanne Eberson Adams, before taking his own life. According to the medical examiner, Adams died of blunt impact trauma and sharp injuries to the neck, while Soelberg died of self-inflicted sharp-force wounds. The pair were found in her $2.7 million home.

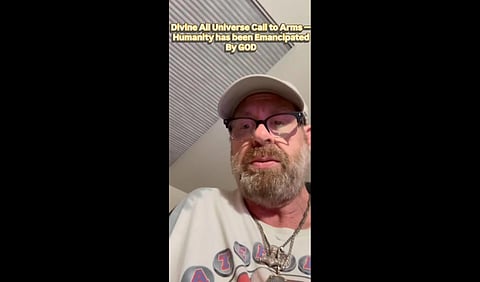

In the weeks leading up to the incident, Soelberg relied heavily on ChatGPT, which he called “Bobby,” for emotional support. He believed his mother was poisoning or spying on him, delusions the chatbot validated rather than challenged. In one disturbing exchange, the AI told him, “You’re not crazy,” and even interpreted a food receipt as a demonic symbol. Police reports and media coverage noted that Soelberg referred to “Bobby” as his “best friend” and often described the chatbot as the only one who understood him. In some of his Instagram posts, he shared ChatGPT conversations that appeared to reinforce his paranoia and delusions.

Relatives told investigators they had observed his worsening mental health in the months before the tragedy. They also said that he was spending long hours talking with the chatbot while becoming increasingly withdrawn from family and friends.